Science doesn’t lie, but scientists… that’s a different story.

The American Statistical Association (ASA) recently released a statement reminding scientists what the P value actually means… along with six principles for its proper use and interpretation. Why? Because scientists and organizations might be gaming the system to get results published, win funding, or generally advance their careers. But before we get into that, let’s explain what they’re messing with: the P value.

It all comes down to how scientific experiments work. As you know, many studies use a control group and an experimental group. The experimental group gets a pill, a treatment, or whatever else the scientists want to learn about, while the control group does not; it’s there only for comparison. This is where the P value comes in. It tells us how surprising the difference between the two groups would be if random chance (such as sampling error) were the only thing at work, which helps scientists judge whether the thing they’re testing had a real effect.

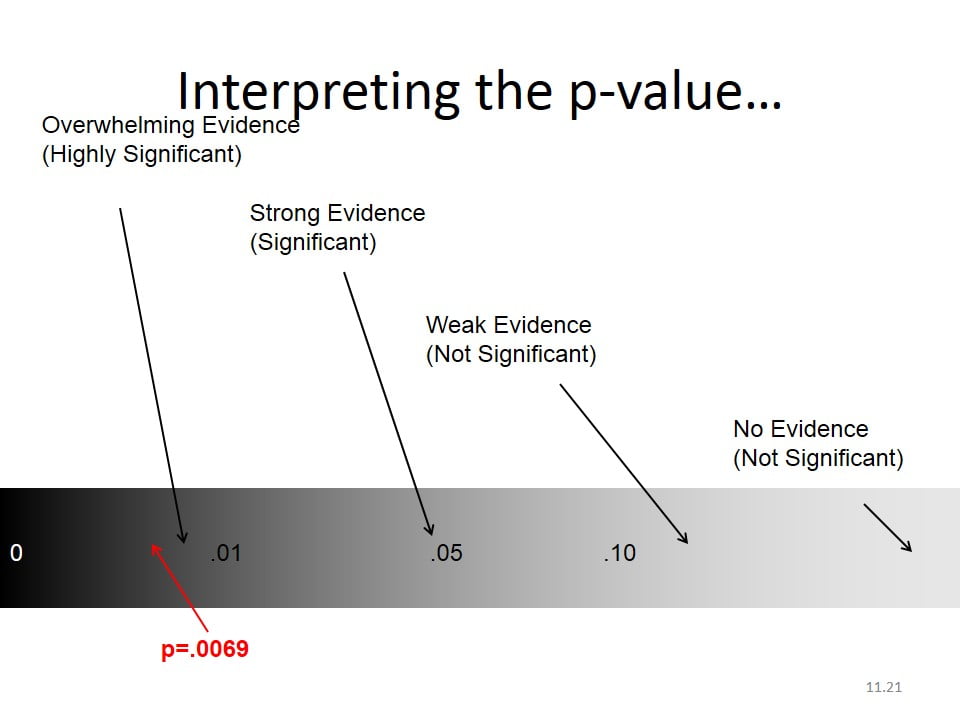

The P value is the probability of getting the observed results (or something more extreme) if there is no real difference between the groups. It ranges from 0 to 1, and the general rule seems to be that the lower the P value, the better.
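To make the definition concrete, here is a minimal sketch of one way to estimate a P value: a permutation test. It is only one of many possible tests, not necessarily what any particular study uses. It assumes each participant contributes a single number and that “no real difference” means the group labels are interchangeable. The function name, the data, and the 10,000-shuffle default are hypothetical choices for illustration.

```python
import random
from statistics import mean


def permutation_p_value(control, treatment, n_permutations=10_000, seed=0):
    """Estimate a two-sided P value for a difference in group means.

    Shuffling the group labels simulates a world where the treatment has
    no effect; the P value is the share of shuffles whose difference in
    means is at least as large as the one actually observed.
    """
    rng = random.Random(seed)
    observed = abs(mean(treatment) - mean(control))
    pooled = list(control) + list(treatment)
    n_treat = len(treatment)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        # Re-split the shuffled data into fake "treatment" and "control" groups.
        fake_treatment = pooled[:n_treat]
        fake_control = pooled[n_treat:]
        if abs(mean(fake_treatment) - mean(fake_control)) >= observed:
            at_least_as_extreme += 1
    # Count the observed labeling itself so the estimate is never exactly 0.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Hypothetical outcome scores for six participants per group.
control = [2, 3, 1, 4, 2, 3]
treatment = [5, 6, 4, 7, 5, 6]
print(permutation_p_value(control, treatment))
```

If the two groups really were interchangeable, random relabelings would produce gaps this large fairly often and the P value would be large. Here only a tiny fraction of shuffles match the observed gap, so the estimate comes out well below .05.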

Hypothetically, a P value of 1 would mean the results are exactly what you’d expect if the thing being tested had no effect at all, while a P value near 0 would mean results like these would almost never occur by chance alone. Even then, a P value doesn’t prove a treatment works or doesn’t; it only measures how well the data fit the “no real difference” assumption. These extremes are rarely seen in real studies. In practice, by convention, a P value below .05 is considered statistically significant.

The problem is that P values are overemphasized. The American Statistical Association put out its statement clarifying the P value because, as the ASA’s executive director Ron Wasserstein remarks, “The p-value was never intended to be a substitute for scientific reasoning.”

This is a big deal because it has serious consequences for our knowledge and understanding. It means we might be neglecting crucial areas of research. In practice, it could mean we misjudge the true effect of a treatment or medication, because the overemphasis on P values often leads researchers to neglect other information in a study, such as effect size.

Say you’re a drug manufacturer with a hair growth drug you want to market. You run a study, and it comes up with a P value below .05. Great. That means that if the drug did nothing, results like these would rarely show up by chance alone. So everyone should buy that pill, right? But hold on. What got ignored was the effect size. What the P value doesn’t tell us is that those bald guys grew only two hairs each, not a full, luscious mane. So, technically, they’re still bald. This is part of the problem: we shouldn’t put all our faith in this one number. Yet scientists often do, so much so that they might be skewing their experiments to get lower P values.
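The hair example can be put into numbers. This minimal sketch uses made-up summary statistics (treated men grow 2 more hairs on average than untreated men, with a standard deviation of 50 hairs in both groups), a large-sample normal approximation for the P value, and Cohen’s d as the effect size. The function names and all numbers are hypothetical. It shows that the same two-hair effect can look highly “significant” or not significant at all depending only on how many people were studied.

```python
from math import sqrt
from statistics import NormalDist


def two_sample_z_test(mean_c, sd_c, n_c, mean_t, sd_t, n_t):
    """Two-sided P value for a difference in means (large-sample normal approximation)."""
    se = sqrt(sd_c**2 / n_c + sd_t**2 / n_t)
    z = (mean_t - mean_c) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def cohens_d(mean_c, sd_c, n_c, mean_t, sd_t, n_t):
    """Effect size: difference in means divided by the pooled standard deviation."""
    pooled_var = ((n_c - 1) * sd_c**2 + (n_t - 1) * sd_t**2) / (n_c + n_t - 2)
    return (mean_t - mean_c) / sqrt(pooled_var)


# Hypothetical trials: (mean, SD, n) for control, then (mean, SD, n) for treatment.
big_trial = (0, 50, 20_000, 2, 50, 20_000)  # 20,000 men per group
small_trial = (0, 50, 200, 2, 50, 200)      # 200 men per group

for name, trial in (("big", big_trial), ("small", small_trial)):
    print(name, two_sample_z_test(*trial), cohens_d(*trial))
```

The big trial’s P value lands far below .05 and the small trial’s lands far above it, yet the effect size is about 0.04 standard deviations in both. Only the P value changes, because it depends heavily on sample size.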

Just as teachers teach to the test, some scientists design experiments to get the lowest possible P value at the expense of solid scientific reasoning and investigation. Or, after the experiment is done, they choose the analysis methods most likely to produce statistically significant results, or cherry-pick which results to include and which to leave out.
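One common way analysis choices inflate significance is measuring many outcomes and reporting whichever one crosses .05. The sketch below works under deliberately simplified assumptions: independent outcomes, normally distributed data with a known standard deviation of 1, a simple z-test, and no real effect anywhere. It estimates how often such a study would still find at least one “significant” result, and compares that with the standard formula 1 - (1 - alpha)^k for k independent tests. The function names and parameters are hypothetical.

```python
import random
from math import sqrt
from statistics import NormalDist, mean


def z_test_p(control, treatment, sd=1.0):
    """Two-sided P value for a difference in means when the population SD is known."""
    se = sd * sqrt(1 / len(control) + 1 / len(treatment))
    z = (mean(treatment) - mean(control)) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def family_wise_error(n_outcomes, alpha=0.05):
    """Chance of at least one p < alpha across independent tests with no real effect."""
    return 1 - (1 - alpha) ** n_outcomes


def chance_of_false_positive(n_outcomes, n_studies=1_000, n_per_group=30,
                             alpha=0.05, seed=1):
    """Simulated share of no-effect studies that report at least one p < alpha."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        for _ in range(n_outcomes):
            # Both groups come from the same distribution: the "treatment" does nothing.
            control = [rng.gauss(0, 1) for _ in range(n_per_group)]
            treatment = [rng.gauss(0, 1) for _ in range(n_per_group)]
            if z_test_p(control, treatment) < alpha:
                hits += 1
                break  # stop at the first "significant" outcome and report it
    return hits / n_studies


for k in (1, 5, 20):
    print(k, round(family_wise_error(k), 3), chance_of_false_positive(k))
```

With one outcome, about 5% of these no-effect studies come out significant, which is what the .05 threshold is meant to allow. With 20 outcomes to pick from, the chance of at least one “significant” finding rises to roughly 64%.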

So, why are scientists doing this? That can’t be answered with certainty, but it is telling that statistically significant results are much more likely to be published. Researchers Sullivan and Feinn found that the number of published studies with P values in their abstracts doubled from 1990 to 2014, and that 96% of the studies reporting a P value reported one below .05. Getting published plays a huge role in career advancement; in the halls of academia, it’s “publish or perish.” So the ASA’s recent statement is about more than statistical analysis, and more than reproducibility and replication. It’s about what the scientific community values and how it values it: moving away from a binary verdict of “significant” or “not significant” toward a view of evidence on a continuum.

What is something you’d love to research? Or have us research for you? Let us know in the comments!